Neural networks trained with back-propagation have proven to be quite remarkable in a variety of tasks. However one thing I find very annoying is that the effectiveness of neural network largely depends on the design of its structure i.e. how many hidden layers, how many nodes in each layer, use of convolution or recurrent etc. These models are usually very expensive to train and thus make the trial and error approach even more cost prohibitive. Moreover, once a good structure has been fixed to solve a particular task, it may not generalize well to solve problems in other domains. This is very different from our brain, whose structure is highly dynamic and evolves over time. Intuition has been guiding the researchers to design the structure so far. In all due respect, I believe that intuition is important and will always have an important role to play in the development of the field. Meanwhile, I find the work on Neuroevolution highly attractive as it attacks this problem head-on with a novel approach that is biologically plausible.

I am talking about a specific approach called NEAT: evolving neural networks through augmenting topologies It finds its root in genetic algorithm ( for readers without much biology background, sorry that there will be some biology concepts ) The basic idea behind genetic algorithm in the context of optimization is that you start out with a pool of random simple solutions to a problem, and gradually evolve the simple solutions to more complex solutions that give better result. The way to evolve is by either mutation or mating ( sounds a bit similar to how nature evolves us? ). The evolution happens by chance, the newly evolved networks will be added back to the genetic pool. After each step, we will evaluate the performance of each network and only the good ones will be kept to continue to evolve.

The “survival” of a network is determined by how well it performs the task relative to the other networks. A simple algorithm is to keep the best 50% of the networks in the pool before the start of next step. An important insight is that the network of more complex structure usually takes longer to adapt to a satisfactory solution than a simpler structure, causing the more complex structures to “die” before fully reaching their potential. The trick is to categorize the networks according to their structural complexity into different “species” of networks and the principal of “survival of the fittest” is only applied to each species separately ( this is one of the main departures from general genetic algorithm that is proposed by the paper )

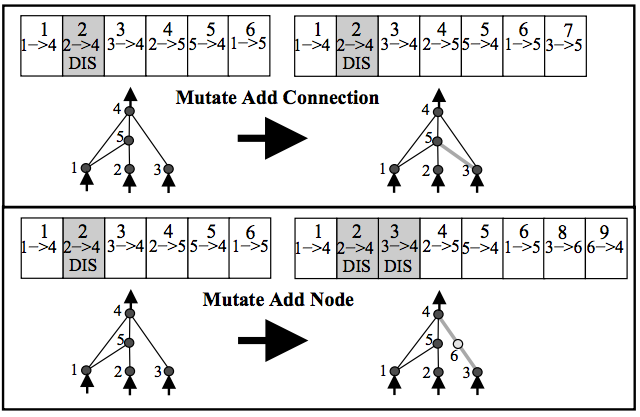

So we start out with a pool of simple neural networks without any hidden layer ( this is so called “genetic pool” ) At each step, mutation either adds a new node in an existing edge or adds a new edge between two nodes to the network, changing its structure. The weight of the edges can also be perturbed in each step, and better weights can be obtained through this evolutionary approach, or they could be trained using standard back-propagation as in any neural network.

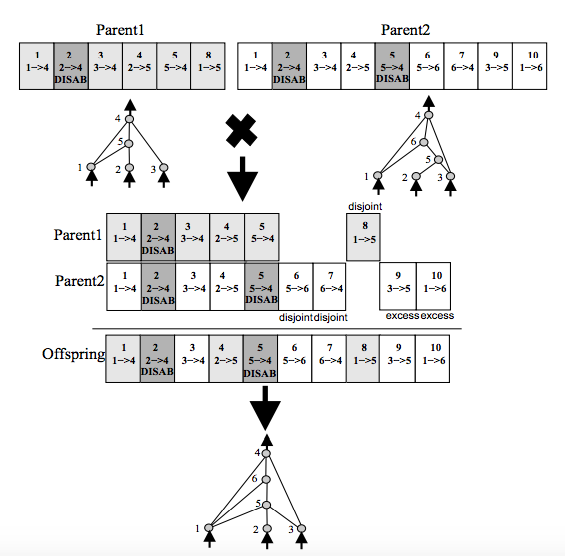

Mating happens between two neural networks in the pool, usually within the same species. How does it work when two networks have different number of nodes and edges? It is not obvious how do we align the nodes and edges up between these two networks. It turns out that we can give each new node/edge a label that identifies the parent of the network. This way we can align up the nodes/edges that are inherited from the same parent, keep them unchanged, and only merge the “novel” node/edge of the children by either randomly selecting or selecting according to their relative “fitness”.

To me, this idea is super helpful for us to achieve general brain. As a biological brain can grow new neurons and synapses with a highly dynamic and adaptable structure. It seems almost impossible to me that we can come up with one static network whose structure is fixed to simulate our brain.

I have tried the main idea behind NEAT and will highly recommend interested audience to the original paper by Kenneth Stanley. In the end, I’ll share a fun youtube video where this approach has been applied to play flappy bird and hope to read more development along this line of research in the future.